I would like to live in a world in which the real estate agent, the information finder (an "explorer" that uses AR) and the transaction platform are all (or nearly all) digital.

Funda Real Estate, one of the largest real estate firms in the Netherlands, was (to the best of my knowledge) the first Layar customer (and partner). Developed three years ago in collaboration with our friend Howard Ogden, the Funda layer in the Layar browser lets people "see" the properties for sale or rent around them, get more information, and contact an agent to schedule a visit.
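At its core, showing the properties "around" a viewer comes down to a distance-and-direction filter over geotagged listings. The sketch below is a hypothetical illustration, not Funda's or Layar's actual implementation: the `Listing` record, the function names and the 500 m default radius are all assumptions. It uses the haversine formula for distance and the initial great-circle bearing, which is what an AR view would need to place each marker in the right direction.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class Listing:
    # Hypothetical listing record (assumption), not Funda's or Layar's data model.
    address: str
    lat: float
    lon: float


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres, using the haversine formula."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial compass bearing from point 1 to point 2, in degrees clockwise from north (0-360)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dl = math.radians(lon2 - lon1)
    x = math.sin(dl) * math.cos(p2)
    y = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dl)
    return (math.degrees(math.atan2(x, y)) + 360) % 360


def nearby_listings(user_lat: float, user_lon: float, listings: list[Listing], radius_m: float = 500):
    """Return (listing, distance_m, bearing_deg) for listings within radius_m, nearest first."""
    hits = []
    for item in listings:
        d = distance_m(user_lat, user_lon, item.lat, item.lon)
        if d <= radius_m:
            hits.append((item, d, bearing_deg(user_lat, user_lon, item.lat, item.lon)))
    return sorted(hits, key=lambda hit: hit[1])


if __name__ == "__main__":
    # Hypothetical listings: one roughly 111 m due north of the viewer, one in another city.
    listings = [Listing("Listing A", 52.3741, 4.8926), Listing("Listing B", 51.9225, 4.4792)]
    for listing, dist, bearing in nearby_listings(52.3731, 4.8926, listings):
        print(f"{listing.address}: {dist:.0f} m at {bearing:.0f} degrees")
```

An AR browser would then compare each bearing with the device's compass heading to decide which markers fall inside the camera's field of view.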

A few hours ago, Jacob Mullins, a self-proclaimed futurist at Shasta Ventures, shared with the world on TechCrunch how he came to the conclusion that real estate and Augmented Reality go together! Bravo, Jacob! I think the saying is "In real estate there are three things that matter: Location. Location. Location." Unfortunately, none of the companies he cites as "lighthouse" examples are in the real estate industry.

Despite the lack of proper research in his contribution, property searching with AR is definitely one of the best AR use cases in terms of tangible results for the agent and the user. It's not exclusively an urban AR use case (you could do it in an agricultural area as well), but a property in a city center will certainly have greater visibility on an AR service than one in the countryside. The problem with doing this in most European countries is that properties are represented privately by the seller's agent, and there are thousands of seller's agents, few of whom have the time or motivation to offer new technology alternatives (read "opportunity").

In the United States, most properties appear (are "listed") in a regional Multiple Listing Service (MLS) shared among agents, and a buyer's agent does most of the work. Has any company focused on this market and developed an easy-to-use application on top of one of the AR browsers (or an AR SDK) using Multiple Listing Service data in the US?

My hypothesis is that at about the time the mobile location-based AR platforms were introduced (mid-2009), the US real estate market was on its way to imploding or had already imploded. People were looking to sell property, not to buy it.

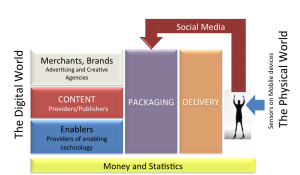

This brings up the most important question, neither raised nor answered in Jacob's opinion piece on TechCrunch: what's the value proposition for the provider of the AR feature? Until there are strong business models that incentivise technology providers to share in the benefits (most likely through transactions), there's not going to be a lot of innovation in this segment.

Are there examples in which the provider of an AR-assisted real estate experience actually receives a financial benefit for accelerating a sale or otherwise taking part in the sales process? Remember, Jacob, until there are real incentives, there's not likely to be real innovation. Maybe, if there's a really sharp company out there, it will remove the agents from the system entirely.

Looking for property is an experience that begins at a location (remember the three rules of real estate?), and the informational part of that experience can be delivered using AR. Help the buyer find the property of their dreams, then add a component that helps the seller, and YOU are the agent.