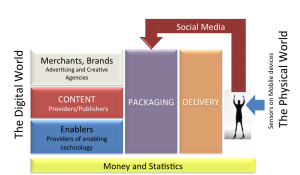

Customers value what they pay for and (usually) pay for what they value. If customers do not pay for value, is the business model at fault?

This basic question lies at the root of a current controversy facing Facebook. Companies in other markets, such as augmented reality (AR), must also answer it or die. I feel that the business model is not always the root of the problem. The take-home message of this post is that just because a business model doesn't work for one company the first time it's tried doesn't mean the same or a similar system will fail for another company in a different geographic context, or at another point in time, after users have been through an educational process.

Three years ago, at around 2 PM on February 11, 2009, I sat in the audience of a session on social networking at the Mobile World Congress. I had already been focusing on mobile social networking for several years (since mid-2006) and remembered the "birth" of Facebook mobile: a mobile Web site and an iPhone application that made it easier to upload photos and notes and to exchange messages on the site, plus a look-up function for phone numbers.

Facebook mobile was a year "old", and I had been following it since its birth in 2008. I wasn't alone: the rest of the world read about Facebook's rapid mobile growth in a Mashable blog post, a GigaOM blog post and an article on BusinessWeek.com.

At that time, Facebook operated these mobile services worldwide:

The number of users and the options for accessing the social network were quite similar in size and approach to where the mobile AR industry is today.

In the nine months from January 2009, when Facebook had a mere 20M mobile users, to September 2009, when it had reached 65M, Facebook implemented what it called the "Facebook Credits" platform. The platform is now available with 80 payment methods in over 50 countries.

Facebook was aware that it was not monetizing user traffic and was open to experimenting with business models. The Payments Views post of August 25, 2009 (which I've preserved below in case of a catastrophic meltdown) shows just how hard Credits were to use on mobile and how creatively Facebook pushed the program.

For a variety of reasons, Facebook failed in its first attempts to monetize the mobile platform. First, there was, and there remains, friction in mobile payments. We've learned since the introduction of smartphone applications that, even though paying is phenomenally easier on a smartphone than on a feature phone, users don't want to take the time to click through multiple screens to authorize a payment. Dozens of companies are working to make mobile payments easier.

Second, the goods on offer simply lacked creativity. In Japan, precisely the same business model worked relatively well, and mobile social networks flourished where Facebook could not break in, because Japanese youth culture was more mobile-savvy and the digital goods were far more innovative, dynamic and valuable to customers. Another reason for abandoning the Facebook Credits system on mobile is that the desktop business model is so lucrative.

In February 2012, a piece in the New York Times reminded us that although more than half of Facebook's 845 million members now log in daily via a mobile device, the company is still not monetizing its mobile assets.

If a company with an estimated pre-IPO valuation of $104 billion is unable to figure out a strong business model for mobile social media, where are AR companies going to look for their cash cow?

I predict that the answer will include something very close to Facebook's original mobile Facebook Credits concept.

I believe strongly in the future of a mobile commerce-for-information model because the presentation of the digital asset will be more refined and the timing of the business model will be completely different. When companies offer their information assets in a contextually sensitive, AR-assisted package for a fixed increment of time and in a limited location, users will know what they're getting and purchase only what they need.

In addition, users will have been through several generations of commercial "education" on mobile platforms. In the months and years before digital assets (information, games, content) are sold in small increments, users will have learned that they can use their devices to pay for transportation fares, movie tickets and even drinks in a nightclub.

While it will certainly not be the only business model on which mobile AR service and content providers rely, the tight relationship between the user's willingness to pay for an experience and the value provided will bring about repeat usage and, eventually, widespread adoption.

———————————————————-

In case this company's site melts down, I have taken the liberty of reproducing the full text of the Payments Views post below:

Purchasing Facebook Credits with Zong Mobile Payments

by Erin McCune on August 25, 2009

At Glenbrook we believe that social eCommerce and virtual currencies are the new frontier of payments. Person-to-person transfers, charity donations, and micropayments for virtual goods (e.g. games, music, e-books, etc.) are exploding within social networks and as the 800-pound-gorilla in the social networking space, all eyes are on Facebook. Estimates vary, but $300-500 million in transactions may happen within Facebook in 2009 (note 1), although thus far precious few of those transactions are funded by a native Facebook payment mechanism.

A couple days ago I decided to send my colleague Bryan a birthday gift on Facebook and was startled to discover that Facebook now has an option to buy Facebook Credits, Facebook’s fledgling virtual currency, via mobile phone using Zong (more from Payments Views on Zong here). Developers on Facebook have accepted mobile payments for some time now, from Zong as well as other mobile payment providers, but the Facebook Gift Shop and Facebook Credits are Facebook services, not a developer product. And up until now (note 2) Facebook has only accepted credit card payments.

Being the payment geek that I am, I opted for the mobile phone payment option and took screen prints of the process flow. And then I wanted to compare the check out process via phone to the credit card check out process, so I bought Bryan a second gift (lucky Bryan) and took more screen prints. Continue reading to see a comparison of the check out process for the two payment methods.

But first, a little background…

The (Continuing) Evolution of Facebook Payments

- There has been a long-standing Facebook Gift Shop where users can purchase virtual gifts for one another for $1 each. (Some sponsored gifts are free.) Users purchase “gifts” with a credit card: MasterCard, Visa, AmEx.

- December 2007: Rumored that beta test of payments system for applications was imminent. Developers were instructed to sign up to participate (and had to sign an NDA).

- November 2008: Converted gift shop dollars to “credits.” Each $1 buys 100 credits, so gifts that used to cost $1 are now priced at 100 credits. Still pay for Facebook Credits with a credit card.

- March 2009: Facebook claims to be “looking at” a virtual currency system.

- April 2009: Facebook introduces a limited pilot program whereby users can give credit to one another. If one user “likes” content that one of their friends has posted, the user can give them a virtual tip, using Facebook Credits. The only thing you can do with the credits is buy Gifts or give them to your other friends.

- May 2009: Facebook announces “Pay With Facebook” a new feature that will enable users to make purchases from Facebook application developers. Funding is via Facebook Credits, which can be purchased only via credit card.

- June 2009: Facebook began testing payment for virtual goods within Facebook using Pay With Facebook and Facebook Credits, starting with the GroupCard, Birthday Calendar, and MouseHunt applications.

- August 2009: Facebook announces that the Gift Store is conducting an “alpha test” of non-Facebook gifts in the Facebook Gift Shop, including some physical goods (e.g. flowers, candy).

- August 2009: It is now possible to purchase Facebook Credits with your mobile phone, via Zong.

Purchasing Facebook Credits via Mobile Phone


When I clicked on Bryan’s Facebook profile I was reminded to wish him Happy Birthday, and optionally, buy him a “gift”

Up until now, Facebook has only accepted payment via credit card for Gift Credits. But now it is possible to pay with your mobile phone. Note that the “pay with mobile” option is listed first.

I could select whether to purchase 15 ($2.99), 25 ($6.99), or 50 ($9.99) Facebook Credits and was then prompted to enter my mobile phone number.

Meanwhile, I received an SMS text message from Zong providing a PIN number, confirming a payment of $2.99 to Facebook, and instructions on how to stop the payment or get help:

I entered the PIN number provided, waited a few moments, and then got a confirmation screen.

Finally, I received two confirmation SMS text messages from Zong (not from Facebook):

Purchasing Facebook Credits with a Credit Card

For Bryan’s second gift (a virtual beer, I am sure he would have preferred a real one!) I opted to pay with a credit card. Note the difference in price per Facebook Credit (more on that in a minute).

Next I entered my card details and was immediately presented with a confirmation screen. The process is definitely quicker (and cheaper) if you purchase via credit card.

Finally, when I purchased via credit card I received a confirmation email directly from Facebook (whereas with the mobile phone payment I received the confirmation via SMS text from Zong, rather than Facebook).

Pricing Varies by Payment Method

When I paid with my mobile phone the price per Facebook Credit was twenty cents. I only paid ten cents per Facebook Credit when I made my purchase with a credit card. Zong charges the merchant (in this case Facebook) a higher processing fee than the credit card companies do. This is not uncommon. Payments via mobile phone are typically for virtual goods (ring tones, avatar super powers, games, etc.) with relatively low cost of goods, thus merchants are less price sensitive. Once they’ve done the coding, every incremental sale above and beyond development costs is profit. Mobile payments for virtual goods cost between 20-50% of the transaction amount, with most of the fee being passed on to mobile phone carriers. Given this pricing structure, it is not surprising that Facebook charges more per Facebook Credit when you buy with your mobile phone. It is unclear how much of the net fee application developers receive and how much Facebook retains, and if the split varies depending on payment method.
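The per-credit comparison above is simple arithmetic, and a short Python sketch can reproduce it from the figures in the post: $2.99 for 15 credits by mobile, ten cents per credit by card, and mobile processing fees of 20-50% of the transaction. The function names and the round-half-up-to-the-cent rule are illustrative assumptions, not anything Facebook or Zong published.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def price_per_credit(bundle_price: Decimal, credits: int) -> Decimal:
    """Price of one credit in a bundle, rounded half-up to the cent (assumed rule)."""
    return (bundle_price / credits).quantize(CENT, rounding=ROUND_HALF_UP)


def merchant_net(amount: Decimal, fee_rate: Decimal) -> Decimal:
    """Amount the merchant keeps after a fee given as a fraction (0.20 means 20%)."""
    return (amount * (1 - fee_rate)).quantize(CENT, rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    # Mobile purchase described in the post: 15 credits for $2.99 via Zong.
    mobile = price_per_credit(Decimal("2.99"), 15)
    # Per-credit price the post reports for the credit card purchase.
    card = Decimal("0.10")
    print(f"mobile: ${mobile}/credit, card: ${card}/credit, ratio {mobile / card}")
    # Ends of the 20-50% fee range quoted in the post, applied to the $2.99 purchase.
    for rate in (Decimal("0.20"), Decimal("0.50")):
        print(f"fee {rate:.0%}: merchant keeps ${merchant_net(Decimal('2.99'), rate)}")
```

Run as a script, it shows the mobile price working out to about twice the card price, and the merchant keeping roughly $1.50 to $2.39 of a $2.99 mobile purchase, before any split between Facebook and application developers.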

Other Forms of Payment Within Facebook

Keep in mind that Facebook Credits are just one way of purchasing goods within Facebook. Today, Facebook application developers monetize their games and other applications by accepting payment directly using PayPal, Google, Amazon FPS, or SocialGold. Or developers may opt to receive direct payment by mobile phone through Zong, Boku, or another mobile payment provider. Virtual currencies that can be used across a variety of social networks and game sites include Spare Change and SocialGold. It is also possible to earn virtual currency credit by taking surveys and participating in trials offered via Super Rewards, OfferPal Media, Peanut Labs and many others. And finally, game developers in particular often accept payment via a prepaid card sold in retail establishments, such as the Ultimate Game Card. The social and gaming web is exploding with virtual currency offerings, yet thus far no one model or payment brand dominates.

We’ll continue to monitor Facebook’s payment evolution and track the development of social eCommerce here at Payments Views.

Notes

- Facebook transaction value estimates here.

- Caveat: I am not quite sure when Facebook started accepting Zong – sometime after June, as that was when I last checked in. I suspect, but haven’t confirmed, that the change was made in conjunction with last week’s announcement that the Gift Store is conducting an “alpha test” of non-Facebook gifts in the Facebook Gift Shop, including some physical goods (e.g. flowers, candy). If anyone out there knows for sure, please let us know in the comments.

End of full text.