Until a technology (or suite of technologies) reaches critical mass-market "mind share" (defined here as high awareness among those outside the field, but not necessarily mass-market adoption), little attention is given to legal matters. The big exception I'm aware of in the domains I monitor is the attention that mobile network operators give to what goes over their infrastructure. Their sensitivity is due to the fact that operators are, under national regulatory policies, responsible for how their services are used and abused.

Although spimes are too diverse to be recognized as a trend by the mass market, Augmented Reality is definitely approaching the public consciousness in some countries. One of those is the United States. AR is on several 2012 top technology trend lists (a post on Mashable, for example), and the BBC ran a four-minute video piece on AR in New York City during a prime-time TV news magazine (hat tip to Brian Wassom for this one).

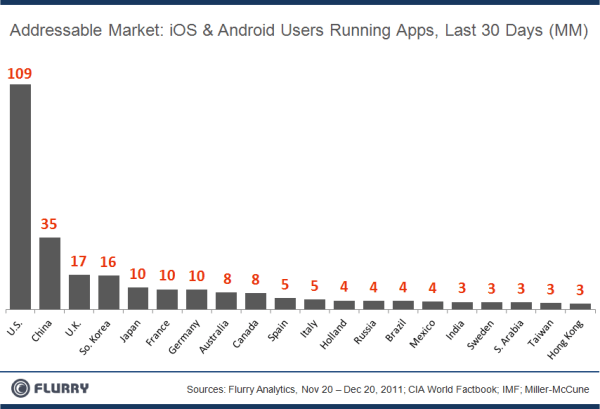

With over 50% of US cellular users on smartphones (according to a Flurry report quoted on TechCrunch on December 27, 2011), and a very litigious society, Americans and American firms are likely to be among the first to test and put in place laws about what can and can't be done when combining digital data and the physical world. In June 2011, during the third AR Standards Community meeting, Kevin Pomfret, an attorney practicing geospatial law in the US, spoke about the potential legal and regulatory issues associated with AR. He identified liability for accidents or errors during AR use as one of the issues. Pornography and other content for "mature audiences" was another area that he highlighted.

1. The First Licensing Model for AR Content. I'm a stickler when it comes to vocabulary. Although often used, the term "AR content" is a misnomer. What people are referring to is content that is consumed in context and synchronized with the real world in some way. In this light, there are really two parts to the "AR content" equation: the reality to which an augmentation is anchored, and the augmentation itself.

Wassom's point is that, in 2012, a business model around premium AR content will be proven. I hope this will happen but I don't see a business model as a legal matter. There is a legal issue, but it is not unique to AR. There certainly are greedy people who cross the line when it comes to licensing rights. Those who own the information that becomes the augmentation have a right to control its use (and if it is used commercially with their approval, to share in the proceeds). There's never been any doubt that content creators, owners, curators and aggregators all have a role to play and should be compensated for their contributions to the success of AR. However, when information is in digital format and broken down into the smallest possible unit, what is the appropriate licensing model for an individual data field or point? How those who provide AR experiences will manage to attribute and to license each individual augmentation is unclear to me. Perhaps the key is to treat augmentations from third parties the way we treat digital images on the web. If the use is non-commercial, it can be attributed, but there is no revenue to share. When the use is commercial, in "premium AR experiences," the entity charging a premium fee must have permission from the owner of the original data and distribute the revenues equitably.

2. The First Negligence Lawsuit. Wassom believes that people whose awareness of the physical world is so completely impaired by augmentations that they are injured would feel that they can put the blame on the provider of the AR experience. He's got a point, but this should be nipped in the bud. I think those who provide platforms for AR (applications or content) should begin their experiences with a terms-and-conditions or disclaimer agreement. It may take one negligence or liability suit to drive the point home, but over time we will all be required to agree to a clause which releases the provider from responsibility for the actions of users. Why don't people publishing AR experiences preempt the whole problem and introduce the clause today?

3. First Patent Fight will be over Eyewear. I'm following the evolution of hands-free AR hardware and agree that it is not going to be much longer before these devices are commercially available. Nevertheless, more than 100,000 of them will need to be sold before it is worth anyone's time and money to go after the provider of the first generation of eyewear for consumer use. In my opinion, it will take several more generations (we are already in the second or third) before the technology is sufficiently mature to be financially viable. By that time, a completely different cast of characters will be involved. Why would anyone with patents in this area want to stifle innovation in hands-free AR in 2012 with a patent fight?

4. Trademarks. I'm looking forward to the day when the term "Augmented Reality" completely disappears. If trademark claims around the term begin to appear in 2012, so much the better! Then people will begin to drop the term for what will be commonplace anyway, and we can shorten the time it takes to simply accept that this will be a convenient and compelling way to live.

5. AR frowned upon by family values and fundamentalist religious groups due to explicit content. It is clear that adult content drives the growth of many technologies, and AR is unlikely to be different, so I agree with Wassom that the adoption of AR in this market is inevitable. It is likely to provide a very clear business case for premium content so, from a financial point of view, this is all good. Having pedophiles using AR would not be surprising and is certainly not desirable, but I don't think the technology making AR possible will be treated any differently from other technologies. It's not the technology that is to blame. It's human nature.