For several reasons, including the fact that the two biggest manufacturers do not publish these figures, no one knows precisely how many smartphones shipped in 2011. Shipments are also only one of the data points that would help identify where mobile AR efforts could have the highest impact in 2012.

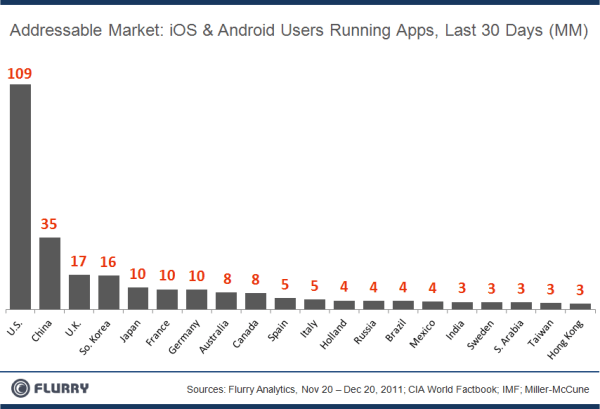

Let's assume that only iOS and Android users can have mobile AR experiences. Smartphone owners also need to know how to use their devices and see some benefit as regular users; running apps at least once a month is a strong qualification. The figure released by Flurry on December 23, 2011 (below) shows the current total addressable market (TAM) for iOS and Android apps.

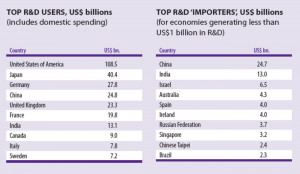

The largest share of the world’s active installed base is in the US: 109 million out of 264 million, or 41%. But the US is not the geography with the greatest number of AR experiences available; experiences are more heavily concentrated in Western Europe. And, considering the conditions placed on providers of geospatial information and the nascent state of that market, smartphone users in the next largest market, China, are unlikely to see a proliferation of AR experiences in 2012.
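For readers who want to check the share arithmetic, here is a minimal sketch. It uses only the two figures quoted above (109 million and 264 million); the function and variable names are made up for illustration and do not come from Flurry.

```python
def share_percent(part_millions, total_millions):
    """Return part as a percentage of total."""
    if total_millions <= 0:
        raise ValueError("total must be positive")
    return 100.0 * part_millions / total_millions


# Figures quoted in the text above (active iOS and Android devices, in millions).
US_ACTIVE_MILLIONS = 109
WORLD_ACTIVE_MILLIONS = 264

us_share = share_percent(US_ACTIVE_MILLIONS, WORLD_ACTIVE_MILLIONS)
rest_of_world = WORLD_ACTIVE_MILLIONS - US_ACTIVE_MILLIONS

print(f"US share of active installed base: {us_share:.0f}%")
print(f"Active devices outside the US: {rest_of_world} million")
```

The same helper could be applied to any other country once its figure is read off the chart; no other country values are assumed here.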

Now, look at the other Top 10 addressable markets in the second figure (below).

In addition to boosting the opportunities to engage users in the US, developers of mobile AR should seriously expand their AR publishing reach into Japan, Germany, France, the UK and Italy.